Cybersecurity operations are reaching a critical inflection point. Threat actors leverage automation, ML-driven reconnaissance, and adaptive TTPs (tactics, techniques, and procedures) to exploit enterprise environments faster than most security teams can react. Meanwhile, SOCs are buried under alert volume, struggling with alert fatigue, incomplete telemetry, fragmented tooling, and chronic resource constraints.

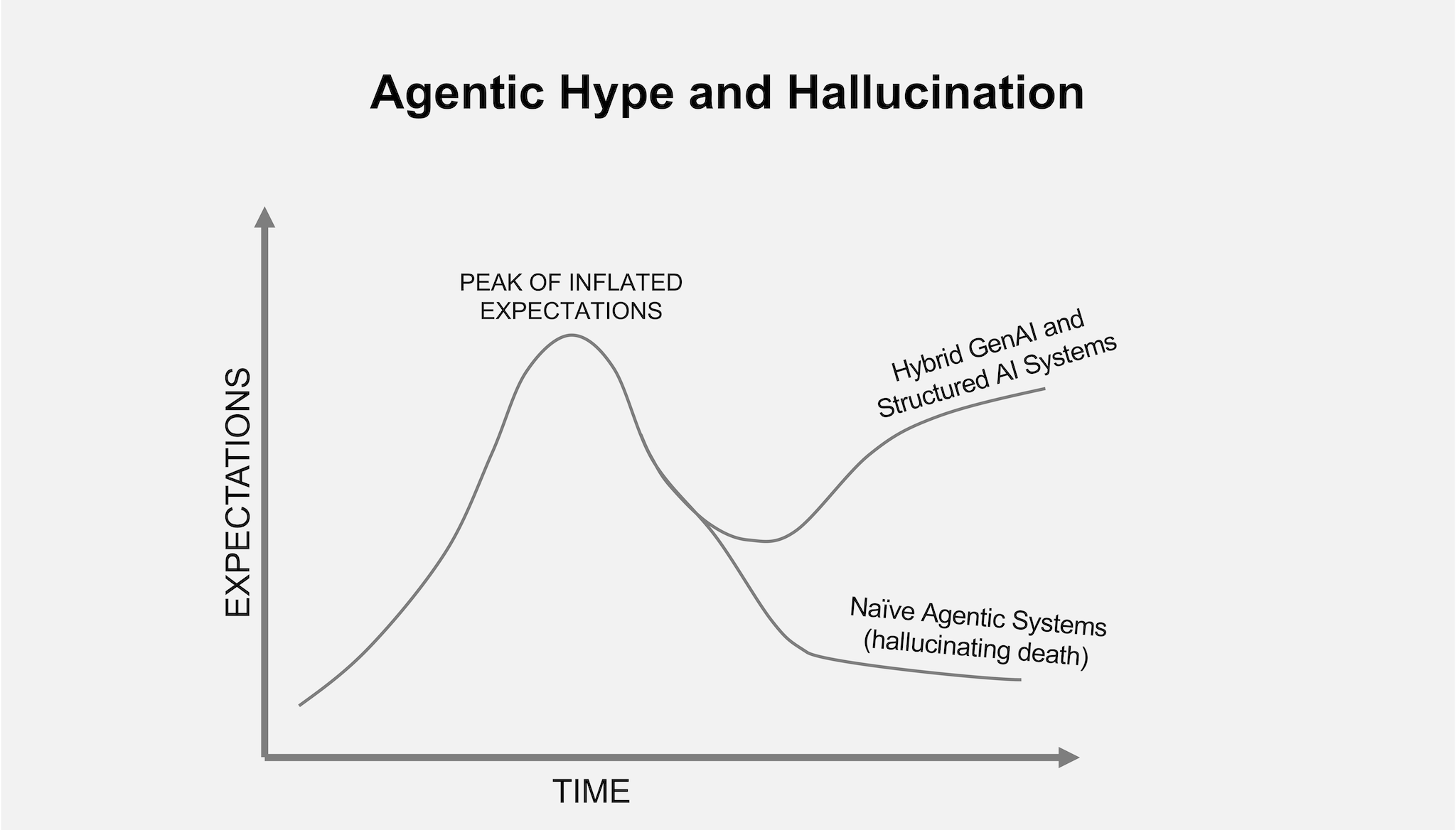

Traditional AI and automation have helped alleviate some of this burden, mainly through enrichment, classification, and correlation. But they fall short of what’s needed in today’s dynamic, high-stakes threat environment.

Enter Agentic AI: a new class of AI systems purpose-built for autonomy, context awareness, and multi-step decision-making. Unlike rule-based automation or narrow AI agents, agentic AI can operate with intent, continuously adapt to novel conditions, and execute defensive workflows end-to-end, with minimal human oversight.

What is Agentic AI?

Agentic AI refers to advanced AI systems designed for autonomous, adaptive, and goal-directed behavior in complex, evolving environments.

Unlike traditional AI that relies on predefined rules or manual prompts, agentic AI systems can independently manage long-term objectives, dynamically orchestrate tools and sub-agents, and make context-sensitive decisions using persistent memory and real-time telemetry.

These systems are engineered to understand business objectives, break down tasks into manageable components, learn from past outcomes, and continuously course-correct without constant human oversight.

In cybersecurity, agentic AI enhances threat detection and automated response, ensuring faster and more effective protection against evolving threats.

Core Characteristics of Agentic AI

| Trait | Description |

| Autonomy | Executes multi-step operations aligned with security objectives—without human prompting. |

| Adaptability | Learns from feedback loops, shifts tactics based on evolving threat or system state. |

| Context Awareness | Incorporates real-time business and technical context into decisions (e.g., blast radius, asset criticality). |

| Tool Orchestration | Integrates with detection, response, and management tooling—calls APIs, runs playbooks, initiates actions. |

| Persistent Memory | Maintains internal state across interactions and workflows to support continuity and goal tracking. |

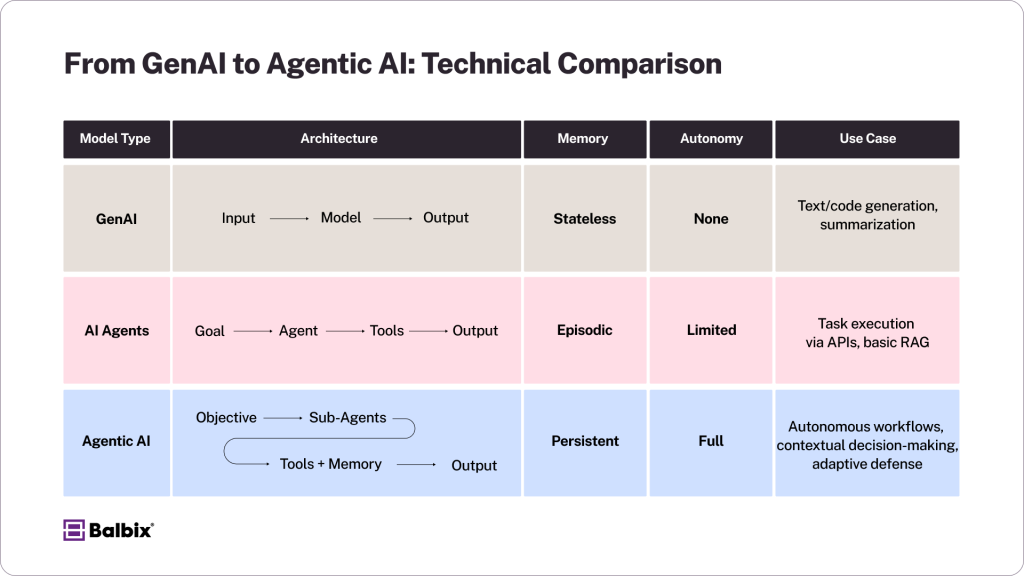

GenAI vs. AI Agents vs. Agentic AI

As AI capabilities advance, there’s a clear progression in architecture and autonomy from simple prompt-based models to fully autonomous systems capable of managing complex, multi-step workflows. Let’s dive into how the three differ in the same universe:

Generative AI (GenAI)

Flow: Input → Model → Result

GenAI systems (like large language models) generate text or code in response to prompts. They’re stateless and lack agency—output is entirely driven by the input, with no goal persistence or task planning.

AI Agents

Flow: Goal → Agent → Tools → Output

Agents are task-oriented systems that invoke tools (e.g., APIs, web search, code execution) to complete a specified goal. They exhibit some autonomy but are often tightly scoped and remain prompt-dependent. Most agents rely on a single execution loop and lack persistent memory or dynamic planning.

Agentic AI

Flow: Objective → Sub-Agents → Tools + Memory → Output

Agentic AI systems are designed for autonomous, multi-step operations. They decompose objectives into subtasks, coordinate sub-agents, use external and internal tools, and leverage short- and long-term memory. These systems exhibit planning, adaptability, and initiative, allowing for long-horizon task execution with minimal human input.

Agentic AI Memory Architectures & Lifecycle

While agentic AI can maintain persistent memory, how this memory is implemented and managed is crucial for effective performance. There are two main types of memory: ephemeral memory and persistent memory.

Ephemeral memory is used for short-term decision-making, whereas persistent memory is crucial for long-term learning and goal tracking. Persistent memory can be modeled using vector embeddings, high-dimensional representations of knowledge that are dynamically updated as new data arrives.

However, persistent memory introduces challenges regarding context window management and privacy concerns. For example, an agent might forget certain details or behaviors after a set period to comply with data privacy regulations like GDPR. Managing the lifecycle of memory—deciding when to discard outdated knowledge or adjust its representation—requires careful governance. Without proper management, hallucinations (inaccurate inferences from outdated data) or error propagation (when small mistakes accumulate over time) are risks.

Single-Agent vs. Multi-Agent Agentic Systems

Architecture plays a critical role in agentic AI design, with one of the key decisions being whether to implement a single-agent or multi-agent system.

Single-Agent Systems

In a single-agent system, a single autonomous agent manages an entire workflow from start to finish. This agent has access to the necessary tools, memory, and context, and is responsible for planning, executing, and adapting tasks. The benefits of this approach include simpler coordination logic, faster decision cycles, and easier auditing and debugging.

However, single-agent systems have limitations, particularly regarding scalability for complex tasks and the potential for bottlenecks when managing multiple objectives simultaneously.

Multi-Agent Systems

On the other hand, multi-agent systems delegate tasks to a collection of specialized agents that work together toward a common goal. These agents can focus on specific domains, such as vulnerability analysis, threat correlation, or containment, and communicate through a central orchestrator or peer-to-peer mechanisms.

The advantages of multi-agent systems include greater modularity and specialization, scalability for complex or distributed environments, and fault tolerance, where the failure of one agent does not disrupt the entire system. However, this approach requires robust coordination and communication protocols to ensure smooth operation and prevent conflicts or redundancy between agents.

In cybersecurity, multi-agent architectures are especially powerful. For instance, one agent might detect abnormal identity behavior, another could assess exposure impact, while a third initiates containment actions—all operating in parallel, with shared context and memory. This design enables agentic AI to act swiftly and intelligently across diverse attack surfaces.

Goal and Task Management Frameworks

Agentic AI systems decompose complex objectives into sub-tasks, orchestrate tools, and track dependencies. However, this process requires an efficient task management framework to ensure smooth execution. Task decomposition is often powered by planning models like Hierarchical Task Networks (HTNs), which break down high-level goals into smaller, manageable tasks. Additionally, Planning Domain Definition Language (PDDL) can be used to model actions, goals, and constraints in a formalized way.

Task dependencies and real-time conditions must also be tracked, which is done via a dynamic task management system. Goal-oriented architecture helps ensure that agentic AI can re-prioritize tasks or adjust plans in real time as new data is introduced. This continuous re-evaluation is crucial for environments like cybersecurity, where conditions change rapidly.

Security of Agentic AI Systems

Agentic AI systems must be secured to prevent adversaries from targeting them. Since agentic AI has autonomy and persistent memory, attackers could attempt to manipulate agent actions or exfiltrate sensitive data.

To secure these systems, consider these proactive measures

- Sandboxing and Privilege Separation: Agentic AI must operate within isolated environments to prevent unauthorized access or misuse of resources. Privilege separation ensures agents only have access to the specific tools and data necessary for their tasks, minimizing the damage from a potential breach.

- Prompt Injection Protection: Agents rely on external inputs (e.g., telemetry, threat intelligence), which can be spoofed or manipulated. Ensuring agents validate incoming data integrity is critical to prevent malicious redirection of their actions.

- Auditability and Logging: Every action taken by an agent should be logged for transparency and accountability. This includes interactions with external APIs, decisions made, and tools invoked. These logs are crucial for compliance and debugging.

How Agentic AI Works in Cybersecurity

1. Autonomous Operation

Agentic AI can coordinate actions like validating anomalies, cross-checking threat intel, isolating hosts, launching forensic tasks, notifying IR teams, and recommending fixes when integrated with systems like EDR, SOAR, and threat intelligence platforms. These capabilities depend on secure API access, strong orchestration logic, and careful governance to ensure safe, accurate decision-making in high-stakes environments.

Tool Use and Interoperability

Agentic AI operates by invoking external tools and services to achieve its objectives. These tools often include APIs, threat intelligence platforms, and SOAR solutions. Effective tool orchestration is key to agent performance, as it allows agents to seamlessly interact with these tools to gather data, take action, and adapt to new information.

When integrating external tools, there are several technical considerations:

- API Calls and Security: Agents use APIs to interact with various tools. These APIs must be secured, typically through mechanisms like OAuth or API tokens, to ensure unauthorized agents cannot gain access.

- Tool Wrappers: Many agents use frameworks (e.g., LangChain) to abstract tool interaction, ensuring a consistent method of invoking and managing tools. This helps in handling tool failures or retries.

- Response Validation: The outputs from tools must be validated before they are used in decision-making. Agents must implement error-checking and fallback mechanisms to avoid acting on incorrect or incomplete data.

2. Contextual Decision-Making

Smarter, risk-centric decision-making becomes possible when AI agents move beyond static methods like CVSS. When integrated with asset data, business context, and threat intelligence, it can weigh factors such as external exposure, application criticality, historical exploitability, and potential blast radius. This enables dynamic prioritization based on actual risk, replacing traditional alert triage with decisions tailored to an organization’s unique environment.

3. Learning Over Time

Agentic AI can maintain persistent memory and learn over time, depending on how the system is architected. Many current implementations simulate memory using tools like vector databases (e.g., Pinecone, Weaviate), rather than possessing native long-term memory. To be effective, memory must be intentionally designed and governed to avoid issues like hallucinations, error propagation, or privacy risks.

4. Collaborative Workflows

Instead of replacing analysts, these systems enhance their effectiveness by handling repetitive operational tasks. Connected to tools like ServiceNow, Jira, and threat intelligence platforms, they can draft root cause summaries, generate remediation tickets, prioritize vulnerabilities by business and technical risk, and escalate only the most critical events. This allows human teams to shift their focus to strategic efforts like threat hunting, adversary simulation, and architecture planning.

Human-in-the-Loop Configurations

While agentic AI reduces the need for direct human intervention, having a human-in-the-loop (HITL) for high-risk actions is often beneficial. For example:

- Approval Gates: Agents can request human confirmation before taking drastic actions like isolating a critical host or shutting down a network segment. This ensures that decisions are aligned with organizational strategy and risk tolerance.

- Feedback Loops: Analysts can provide feedback to agents to refine their decision-making. For instance, if an agent mistakenly categorizes a low-risk event as high priority, analysts can correct it, allowing the AI to adjust its future behavior.

- Audit Trails: For compliance reasons, it’s important to maintain comprehensive logs of all agent actions and human interactions. This ensures accountability and allows security teams to review actions post-incident.

Why Cybersecurity Demands Agentic AI

As adversaries increasingly rely on automated tools like C2 frameworks and AI-generated payloads to scale their attacks, traditional static defenses struggle to keep pace. Agentic AI systems, however, can react in milliseconds, quickly correlating data across multiple attack vectors and taking decisive action.

Additionally, alert overload remains a significant bottleneck in Security Operations Centers (SOCs), with analysts overwhelmed by noise. Agentic AI cuts through this clutter, prioritizing threats by impact and escalating only when necessary.

With limited human resources, agentic AI handles repetitive, time-sensitive tasks, freeing analysts to focus on advanced investigations and strategic mitigation. This allows cybersecurity teams to stay ahead in an environment where speed and precision are crucial.

Operational Monitoring of Agentic AI

To ensure the long-term effectiveness of agentic AI, organizations should actively monitor the health and performance of these systems. Key operational metrics include:

- Task Success Rate: Tracks how often agents complete tasks as expected.

- Error Rate and Drift: Measures how often an agent encounters issues or fails to meet objectives and any divergence from expected behavior.

- Time-to-Resolution: Tracks how quickly agents can complete tasks once initiated, helping teams identify areas for performance improvement.

Building an observability stack, which includes real-time monitoring, logging, and dashboards, helps security teams monitor agentic AI performance. This enables proactive maintenance and fine-tuning of the system to maintain optimal decision-making accuracy.

What’s Next?

By moving beyond static defenses and rule-based automation, agentic AI empowers security teams to work faster, smarter, and more adaptively. Its ability to dynamically respond to evolving threats and make context-driven decisions strengthens risk reduction and enhances organizational resilience.

From Assistive to Adaptive: The Future of AI-Native Cyber Defense

Agentic AI marks the shift from assistive automation to adaptive intelligence in cybersecurity. Where traditional AI aids analysts, agentic AI begins to reason and respond like one — continuously learning, contextualizing, and improving decisions across the cyber risk lifecycle.

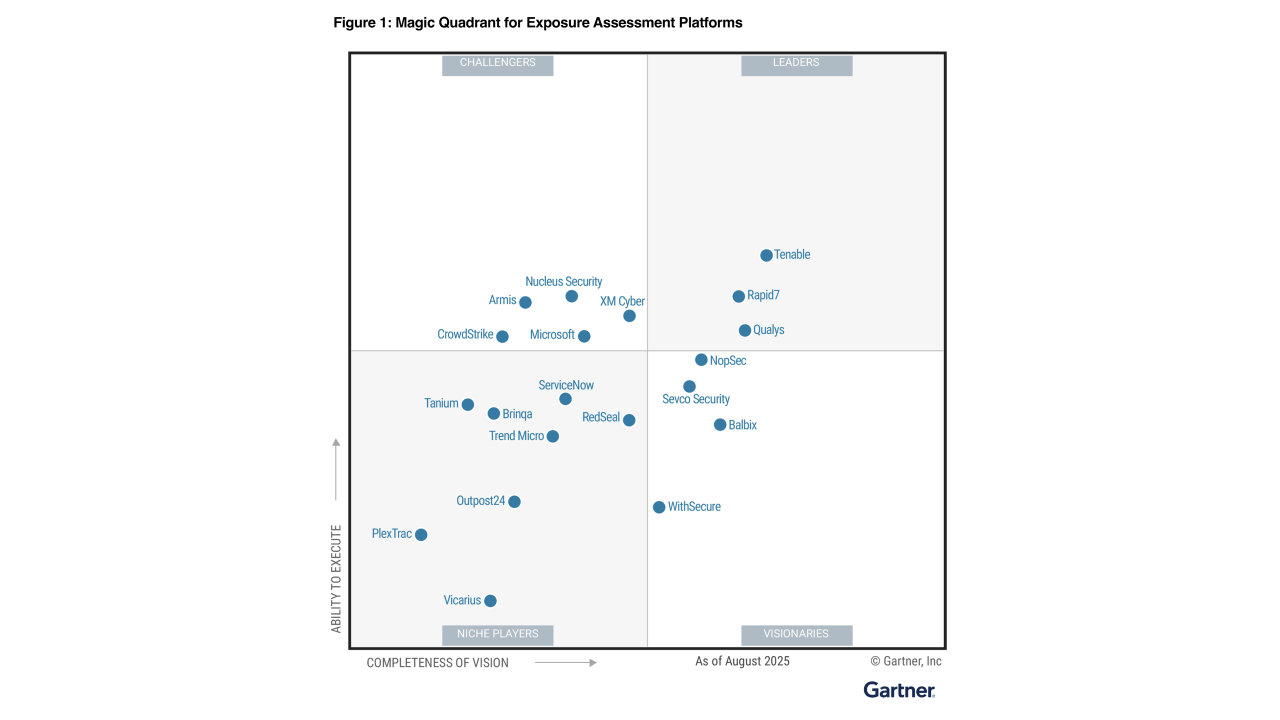

At Balbix, we’re shaping this evolution today. Our AI-native exposure management platform combines hundreds of specialized models that function as an agentic ecosystem — correlating telemetry, prioritizing risk, quantifying financial impact, and recommending next actions with explainability and context. Each subsystem operates like a purpose-built agent:

- The Balbix Brain interprets and contextualizes trillions of signals from vulnerabilities, identities, misconfigurations, and threat intel.

- BIX Copilot transforms these insights into clear, goal-aligned recommendations for every role — from analyst to CISO.

- CRQ engines simulate “what-if” outcomes and continuously recalibrate exposure priorities based on changing conditions.

Together, these elements create an adaptive defense loop — detect, decide, act, and learn — helping security teams move from alert fatigue to augmented precision.

Agentic AI isn’t about replacing humans; it’s about amplifying them.

The destination isn’t full autonomy — it’s explainable, context-aware intelligence that evolves with the enterprise and the threat landscape.

Balbix is leading that journey.

? Request a demo to see how agentic AI enhances exposure management, risk quantification, and decision velocity.

Frequently Asked Questions

- What is agentic AI in cybersecurity?

-

Agentic AI in cybersecurity refers to autonomous, adaptive AI systems that can make context-aware decisions, orchestrate tools, and execute multi-step defensive workflows with minimal human input. Unlike traditional automation, agentic AI continuously learns, plans, and reacts in real time to evolving threats.

- How is agentic AI different from generative AI or traditional AI agents?

-

Generative AI (like large language models) creates content from prompts but lacks memory or initiative. Traditional AI agents can complete defined tasks using tools but are typically limited to single loops. Agentic AI goes further—it maintains persistent memory, adapts over time, and autonomously manages complex, long-horizon cybersecurity operations.

- Why do security teams need agentic AI today?

-

Traditional defenses can’t keep up with threat actors using automation and AI-driven tactics. Agentic AI helps by filtering alert noise, prioritizing real risks, automating responses, and reducing manual effort. This allows analysts to focus on high-value tasks while improving threat response speed and accuracy.

- Can agentic AI replace human analysts in the SOC?

-

No, agentic AI is designed to augment, not replace, human analysts. It handles repetitive and time-sensitive tasks, surfaces only high-impact issues, and supports collaborative workflows. Human oversight remains vital for strategic decisions, tuning AI behavior, and validating outcomes.

- How does agentic AI manage memory and learn over time?

-

Agentic AI combines ephemeral (short-term) and persistent (long-term) memory. Persistent memory is often implemented through vector databases, enabling agents to retain knowledge across tasks. Learning over time helps these systems improve decisions, though memory must be governed to avoid hallucinations and data drift.

- What are the security risks of using agentic AI systems?

-

Agentic AI systems must be protected against risks like prompt injection, privilege misuse, and data exfiltration. Key safeguards include sandboxing, strict access controls, validation of external data, and detailed audit logs to ensure transparency and resilience against attacks.

- How do multi-agent agentic AI systems work in cybersecurity?

-

Multi-agent systems divide complex workflows across specialized agents—like one for detection, one for impact analysis, and another for containment. These agents collaborate through shared context and memory, allowing for scalable, modular, and fault-tolerant threat response across the enterprise.