This is the second piece of my three-part blog on the nature of human intelligence versus AI. The series covers the vocabulary in use today, including the difference between AI, machine learning, expert systems and deep learning, and how AI is being used successfully to address various problems in cybersecurity.

In the first part of the blog, I offered a general definition of intelligence. We differentiated between the notions of General AI (which does not exist today) and Narrow AI (which does).

Digging in.

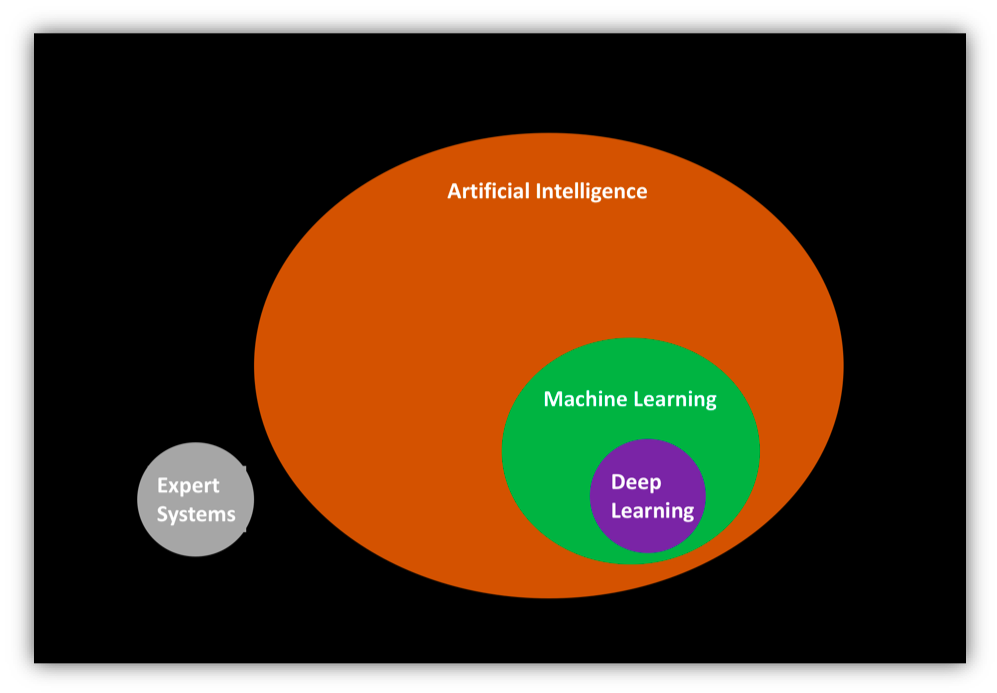

The Difference between AI, Machine Learning, Expert Systems and Deep Learning

Machine Learning (ML) is the application of induction algorithms, a first step in the process of knowledge acquisition, and grew out of the quest for artificial intelligence in the 1960s. ML focuses on algorithms that can be said to “learn”. Rather than writing a specific set of computer instructions to accomplish a task, the machine is “trained” using large amounts of data to give it the ability to learn how to perform the task. Training samples may be externally supplied or supplied by a previous stage of the knowledge discovery process.
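To make the contrast between "writing instructions" and "training" concrete, here is a minimal sketch in Python. Nobody hard-codes the cut-off for flagging suspicious login activity; the program derives it from labeled examples. The data, the feature (failed logins per hour) and the function names are all made up for illustration, and a one-threshold "decision stump" is about the simplest learner there is.

```python
# Toy labeled training data (invented for this example):
# (failed logins per hour, label) where 1 = attack and 0 = benign.
TRAINING = [(1, 0), (2, 0), (3, 0), (4, 0), (9, 1), (12, 1), (15, 1), (20, 1)]


def train_stump(samples):
    """Learn the cut-off that misclassifies the fewest training samples."""
    values = sorted({x for x, _ in samples})
    # Candidate thresholds sit midway between neighbouring observed values.
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]

    def errors(threshold):
        return sum((x > threshold) != bool(y) for x, y in samples)

    return min(candidates, key=errors)


def predict(threshold, x):
    """Classify a new observation with the learned threshold."""
    return 1 if x > threshold else 0


threshold = train_stump(TRAINING)
print(threshold)               # 6.5
print(predict(threshold, 10))  # 1 (flagged as attack)
print(predict(threshold, 2))   # 0 (treated as benign)
```

If the training data changes, the learned threshold changes with it, and no one has to edit the code.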

Several Machine Learning techniques have been developed over the years, including decision trees, inductive logic programming, clustering, Bayesian networks, and artificial neural networks. ML is closely related to and overlaps with computational statistics.

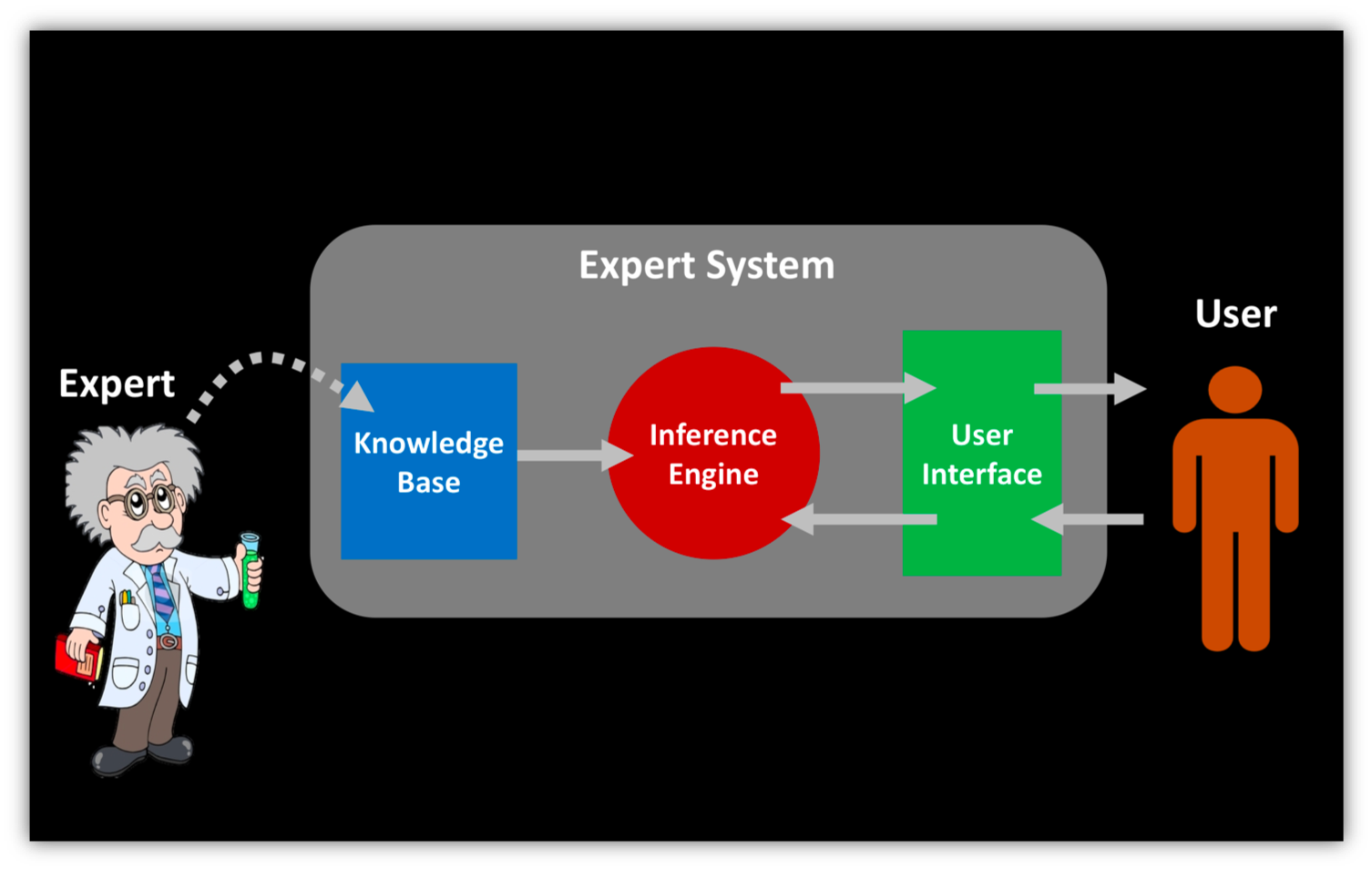

Machine Learning is considered separate and distinct from Expert Systems, which solve problems by fuzzy, rule-based reasoning through carefully curated bodies of knowledge (rules). Expert Systems were touted as the most successful example of AI in the 1980s. The idea behind Expert Systems is that intelligent systems derive their power from the knowledge they have rather than from the specific inference schemes that they use. In a nutshell, Expert Systems have knowledge, but don’t learn on their own. They always need human programmers or operators to make them smarter. By our definition of intelligence, they are not very intelligent.
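For comparison, here is a rough sketch of the expert-system style in Python. It is simplified to crisp true/false facts rather than fuzzy ones, and the rules and fact names are hypothetical. All of the "knowledge" lives in the hand-written `RULES` list, and the inference loop (plain forward chaining) is deliberately trivial.

```python
# Hand-written knowledge base: (facts that must all hold, fact to conclude).
RULES = [
    ({"many_failed_logins", "login_from_new_country"}, "possible_account_takeover"),
    ({"possible_account_takeover", "privileged_account"}, "raise_high_priority_alert"),
]


def infer(facts, rules):
    """Forward chaining: keep applying rules until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


observed = {"many_failed_logins", "login_from_new_country", "privileged_account"}
derived = infer(observed, RULES)
print("raise_high_priority_alert" in derived)  # True
```

The system only gets smarter when a human adds or edits a rule, which is exactly the limitation described above.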

Coming back to systems that learn. Machine Learning is hard because correlating patterns across multi-dimensional data is a hard problem. It is very data and compute intensive. The human brain constantly takes in an enormous amount of sensory data from a large number of sources and across numerous dimensions for many years, slowly perfecting its models, before it achieves the intelligence and expertise that you would associate with skilled (adult) members of your cybersecurity team. Just try to imagine the amount of training data (labeled and unlabeled) that went into the brain of a typical college graduate. More often than not, appropriate training data to feed to ML systems is quite scarce, and as a result, ML programs fail to deliver accurate results.

Artificial Neural Networks and Deep Learning: In recent years, we have seen significant advances in one type of ML, called Deep Learning, which is an evolution of an early ML approach, Artificial Neural Networks, which was inspired by the structure of the human brain. In a Neural Network, each node assigns a weight to its input, which represents how correct or incorrect it is relative to the operation being performed. The final output is then determined by the sum of such weights. Practical neural networks have many layers, each of which corresponds to one of the sub-tasks of the complete operation being performed by the neural network.
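As a rough sketch of what "weights" and "layers" mean in practice, the Python below pushes three input values through a tiny two-layer network. Each neuron computes a weighted sum of its inputs plus a bias and squashes the result with a sigmoid. The weights here are arbitrary numbers picked for illustration; a real network would learn them from data.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def layer(inputs, weights, biases):
    """Compute one layer; each row of `weights` belongs to one neuron."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(inputs, network):
    """Feed the inputs through every layer in turn."""
    activations = inputs
    for weights, biases in network:
        activations = layer(activations, weights, biases)
    return activations


# Arbitrary illustrative weights (not learned).
NETWORK = [
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1]),  # hidden layer: 3 inputs -> 2 neurons
    ([[1.2, -0.7]], [0.05]),                             # output layer: 2 inputs -> 1 neuron
]

output = forward([1.0, 0.5, -1.0], NETWORK)
print(output)  # a single value between 0 and 1
```

A "deep" network is the same idea with many more layers and many more neurons per layer.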

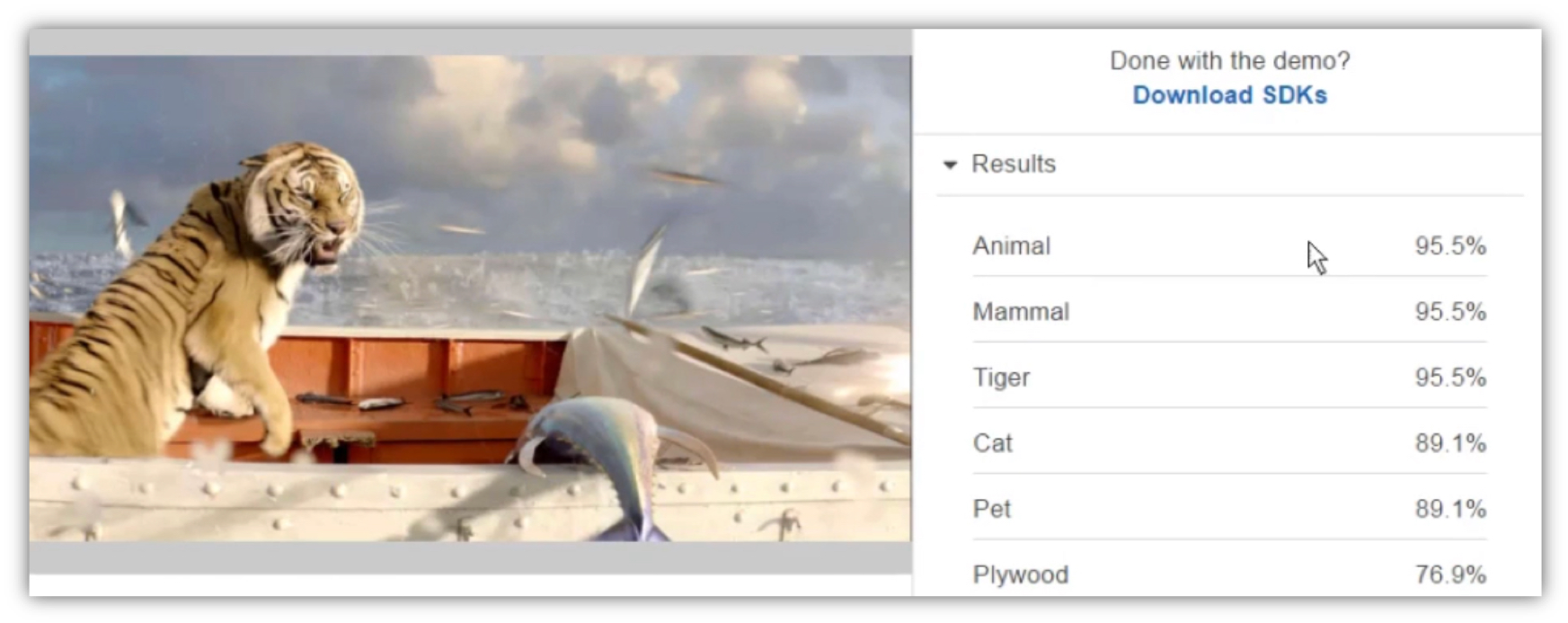

The output of a neural network is in the form of a “probability vector”, which might say, for example, that the system is 90% confident that an image contains an animal and 25% confident that the animal is a crocodile.
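Here is a small sketch of how raw network scores are commonly turned into confidences like these. The 90% and 25% in the example do not add up to 100%, which suggests each label is scored independently, so the first half applies a sigmoid per label. When the labels are mutually exclusive, a softmax is the usual alternative, since it forces the confidences to sum to one. The raw scores below are hypothetical, chosen to reproduce the numbers in the text.

```python
import math


def sigmoid(z):
    """Independent confidence for a single label."""
    return 1.0 / (1.0 + math.exp(-z))


def softmax(scores):
    """Confidences over mutually exclusive classes; they sum to 1."""
    largest = max(scores)
    exps = [math.exp(s - largest) for s in scores]  # shift for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw scores produced by the last layer of a network.
raw = {"animal": 2.2, "crocodile": -1.1}
independent = {label: round(sigmoid(z), 2) for label, z in raw.items()}
print(independent)  # {'animal': 0.9, 'crocodile': 0.25}

# Mutually exclusive alternative, e.g. crocodile vs. lizard vs. dog.
exclusive = softmax([1.0, 2.0, 3.0])
print(sum(exclusive))  # approximately 1.0
```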

Up until quite recently, the study of neural networks had produced little in the way of what you might call “intelligence.” The confidence of the predictive output was low, and therefore not very useful. As you might imagine, the fundamental problem was that even the most basic neural networks were very computationally intensive, and it just was not practical to build and use neural networks for any reasonably complex task. A small research group led by Geoffrey Hinton at the University of Toronto kept working on the problem, finally parallelizing the algorithms for powerful supercomputers and proving the concept.

To understand this difficulty, let’s borrow an example from the field of computer vision and autonomous cars: the problem of recognizing a traffic stop sign. It is quite likely that while the stop-sign-detecting neural network is being trained, it comes up with a lot of incorrect answers. For example, it might do a good job in good visibility conditions, but not fare very well in bad weather. This network needs a lot of training. It needs to see hundreds of thousands, perhaps even millions, of images, until the weights of the various neuron inputs are tuned so precisely that it gets the answer right practically every time, no matter what the conditions are: fog, sun, or rain. It’s at that point that we might say the neural network has learnt what a stop sign looks like.
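The "tuning of weights" can be sketched with a single artificial neuron trained by gradient descent. This is a toy stand-in, not how a production vision system is built. Each image is reduced to two made-up features (fraction of red pixels and an octagon-shape score), the training set has eight invented samples instead of millions, and the function names are my own.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Invented features per image: (fraction of red pixels, octagon-shape score).
# Label 1 = stop sign, 0 = something else.
DATA = [
    ((0.9, 0.8), 1), ((0.8, 0.9), 1), ((0.7, 0.7), 1), ((0.6, 0.9), 1),
    ((0.2, 0.1), 0), ((0.1, 0.3), 0), ((0.3, 0.2), 0), ((0.4, 0.1), 0),
]


def predict(weights, bias, features):
    """Confidence (0 to 1) that the features describe a stop sign."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)


def train(data, epochs=2000, learning_rate=0.5):
    """Nudge the weights a little after every sample to shrink the error."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for features, label in data:
            error = predict(weights, bias, features) - label
            weights = [w - learning_rate * error * x for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


weights, bias = train(DATA)
accuracy = sum((predict(weights, bias, f) > 0.5) == bool(y) for f, y in DATA) / len(DATA)
print(accuracy)
```

The network starts out knowing nothing (all weights zero) and only becomes reliable after many passes over the data, which is why both the volume of training images and the compute budget matter so much.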

This is exactly what Andrew Ng did in 2012 at Google. Ng’s big breakthrough was to increase the layers and the number of neurons in the neural network, and then run massive amounts of data through the network to train it, specifically images from 10 million YouTube videos. The “deep” in Deep Learning is indicative of the large number of layers in such neural networks. The Google Brain project resulted in a neural network trained using Deep Learning algorithms on 16,000 CPU cores. This system had learned to recognize concepts, such as “cats”, from watching YouTube videos, and without ever having been told what a “cat” is. The neural network “saw” recurring visual patterns across millions of unlabeled video frames and learnt this correlation as knowledge, forming its own concept of a cat, quite like how a small child might.

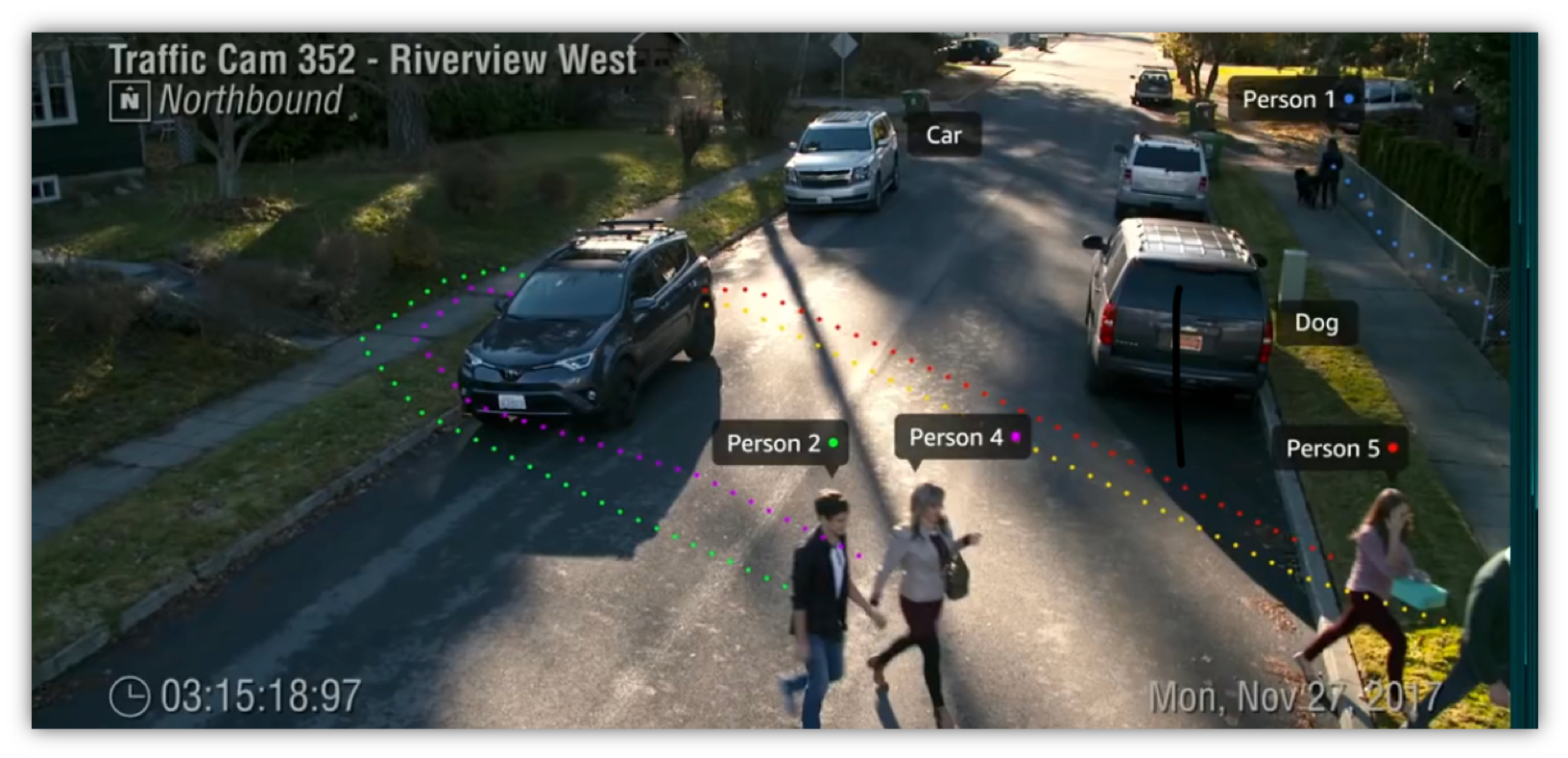

Today, image recognition via Deep Learning is often better than that of humans, with a variety of applications including autonomous vehicles and identifying cancer in blood and tumors in MRI scans. There are also many variations of Deep Learning being actively used and improved. Some of these models can be stacked (operated one after the other) to achieve more advanced classification capabilities. The pictures below are from demos of Amazon’s Rekognition system, which is able to recognize objects, faces and context in images and streaming video using deep learning.

(image credits: Amazon AWS)

Amazon has made it quite straightforward to build fairly complex intelligent applications, particularly in the context of images and videos, using advanced machine learning algorithms via APIs and modules that are available in AWS. If you are interested in this, please take a look at AWS’s machine learning page and check out the various samples and demos.
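To give a feel for how little code this takes, here is a hedged sketch using the AWS SDK for Python (boto3) and Rekognition's `detect_labels` call. It assumes boto3 is installed and that AWS credentials and a region are already configured. The helper names, the sample response and the thresholds are my own, not something from the AWS demos. The `summarize` helper is exercised on a hand-written stand-in response so the snippet can be read and run without an AWS account.

```python
def detect_labels(image_path, min_confidence=80.0, max_labels=10):
    """Send a local image to Amazon Rekognition and return the raw response."""
    import boto3  # imported lazily so summarize() works without the AWS SDK

    client = boto3.client("rekognition")
    with open(image_path, "rb") as image_file:
        return client.detect_labels(
            Image={"Bytes": image_file.read()},
            MaxLabels=max_labels,
            MinConfidence=min_confidence,
        )


def summarize(response):
    """Return (label, confidence) pairs, highest confidence first."""
    pairs = [(label["Name"], label["Confidence"]) for label in response.get("Labels", [])]
    return sorted(pairs, key=lambda pair: pair[1], reverse=True)


# Hand-written stand-in shaped like a detect_labels response (not real output).
SAMPLE_RESPONSE = {
    "Labels": [
        {"Name": "Car", "Confidence": 91.2},
        {"Name": "Person", "Confidence": 98.7},
    ]
}
print(summarize(SAMPLE_RESPONSE))  # [('Person', 98.7), ('Car', 91.2)]
```

With credentials in place, `summarize(detect_labels("street.jpg"))` would do the same for a real image, where `street.jpg` is a placeholder file name.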

Are such systems intelligent? Since Deep Learning and other advanced ML algorithms really learn and become quite knowledgeable and accurate within their domain, they do possess two of the key components of intelligence, as we defined it earlier in this blog series.

Do such systems know how to apply their knowledge to problem solving? Our Narrow AI systems currently require human intervention to tie them into real-world problem-solving workflows, interfacing them with traditional systems and other humans. Human insight is necessary to imagine the combination of a traffic camera system that detects and tracks persons and objects (like the example from AWS Rekognition above) with other face detection and general image detection systems, and training image data available from the California DMV’s driver’s license and automobile license plate databases.

When such a system is installed in our public areas, we might be able to greatly increase our communities’ crime-fighting abilities. Just imagine the force-multiplier effect possible here, the potential increase in effectiveness and speed in the activities that our police, security personnel and investigators carry out every day! Armed with Narrow AI of this type, a group of humans charged with a particular task can certainly become much more effective.

The relationship between AI, Machine Learning (ML), Expert Systems, and Deep Learning can also be understood in reference to the Venn diagram below.

In the final part of this blog, we’ll discuss how intelligent algorithms based on Deep Learning can be used to solve many important problems in cybersecurity.