October 18, 2022

Do we need to rethink our approach to what the financial services sector calls operational risk or non-financial risk? Operational risk can be defined as “the risk that a firm’s internal practices, policies and systems are not adequate to prevent a loss being incurred, either because of market conditions or operational difficulties.” In particular, I’m going to consider IT risk, but I believe much of what I’m going to talk about applies to other risk types.

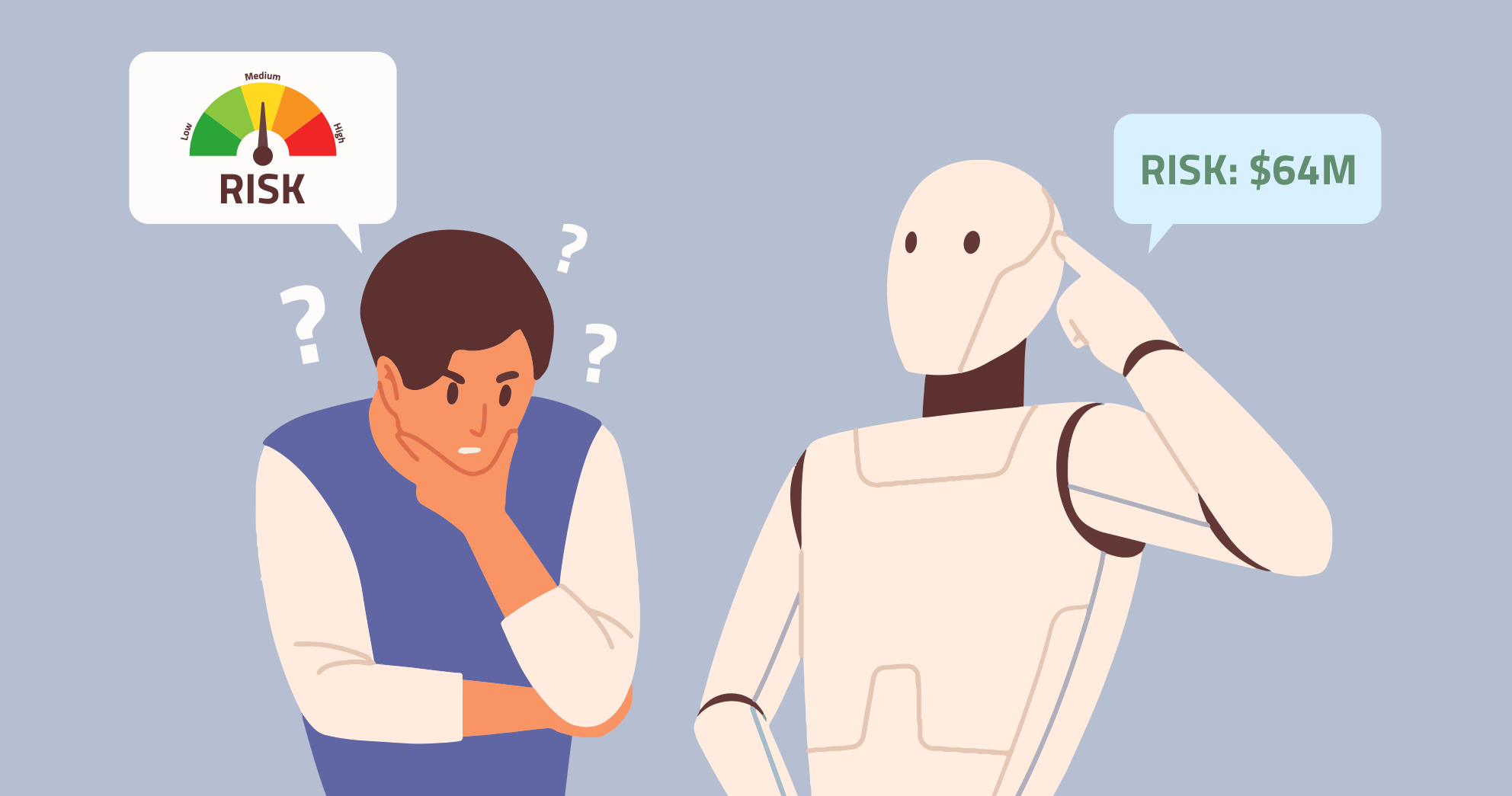

So let’s get straight into it. A traditional approach to managing operational or non-financial risk is likely to center on a risk register, in which each risk is recorded, assessed and subject to periodic review. The review can be annual or more frequent, and ideally a risk would also be reassessed whenever material information comes to light that invalidates the current assessment (a new incident, new business, or the completion of material mitigation). The severity or prioritization of the risk is often assessed against some form of grid: 4×4, 4×5, 5×5, etc. See below for an example:

Each axis has its own set of definitions: one for how likelihood is classified and one for how impact is classified. Impact usually includes financial impact as well as other impact types.

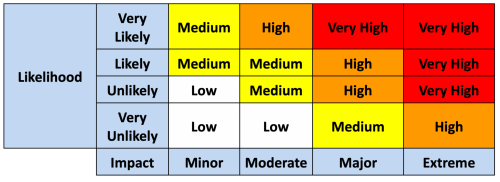

When a risk is assessed, it will usually be assessed inherently first (i.e. before the application of mitigating controls). The degree of mitigating impact of controls (reduction in likelihood or impact, or both) is then assessed to give an assessment of the residual risk levels. If the residual risk is unacceptable, a set of issues and actions may be recorded with the aim of bringing the risk to an acceptable level once these are completed.
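
To make the mechanics concrete, here is a minimal Python sketch of scoring a risk on a 5×5 grid, first inherently and then after controls. The level names, the choice to multiply likelihood by impact, and the Low/Medium/High thresholds are all illustrative assumptions; real grids define their own cells and boundaries.

```python
from enum import IntEnum


class Likelihood(IntEnum):
    # Hypothetical five-point likelihood scale
    VERY_UNLIKELY = 1
    UNLIKELY = 2
    POSSIBLE = 3
    LIKELY = 4
    VERY_LIKELY = 5


class Impact(IntEnum):
    # Hypothetical five-point impact scale
    NEGLIGIBLE = 1
    MINOR = 2
    MODERATE = 3
    MAJOR = 4
    SEVERE = 5


def rating(likelihood: Likelihood, impact: Impact) -> str:
    """Map a grid cell to a band. The thresholds are assumptions."""
    score = likelihood * impact
    if score >= 15:
        return "High"
    if score >= 6:
        return "Medium"
    return "Low"


def apply_controls(likelihood: Likelihood, impact: Impact,
                   likelihood_steps: int = 0, impact_steps: int = 0):
    """Move down the grid by the assessed mitigating effect of controls.

    Levels are floored at the lowest point on each scale.
    """
    residual_likelihood = Likelihood(max(1, likelihood - likelihood_steps))
    residual_impact = Impact(max(1, impact - impact_steps))
    return residual_likelihood, residual_impact


# Inherent assessment: before any mitigating controls
inherent = (Likelihood.VERY_LIKELY, Impact.MAJOR)
print("inherent:", rating(*inherent))

# Residual assessment: controls judged to cut likelihood by three levels
residual = apply_controls(*inherent, likelihood_steps=3)
print("residual:", residual[0].name, residual[1].name, rating(*residual))
```

Note that the number of steps passed to `apply_controls` is still somebody's judgment; the grid only turns that judgment into a band.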

All pretty straightforward. But in my experience it’s not quite as straightforward as it seems.

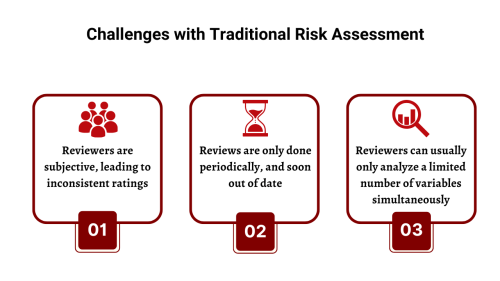

Often these assessments are completed with a group of subject matter experts (SMEs), and it is left to the opinion of these experts as to the level of inherent risk and the mitigating effect of controls (if any). But what happens if the experts disagree? What if one expert thinks the combination of mitigating controls will reduce the likelihood from “Very Likely” to “Very Unlikely”, but another disagrees, suggesting that they only reduce it to “Unlikely”? And when it comes to remediation, how much improvement in the control environment is good enough? Will addressing a specific issue or undertaking a specific action improve the risk assessment?

How do you know which SME is correct? How do you maintain consistency in the assessment unless the same expert completes the assessment across the whole group? It is easy enough for one person to do a complete assessment in a small firm, but it’s more difficult to do so in a multinational. The difficulty is that, even with trained assessors, governance and assurance, it’s still a subjective assessment.

It is also a point-in-time assessment. Even if the subject matter experts are able to gather the latest information, unless the risk exposure is extremely static the assessment will likely be out of date shortly after completion. I guess this could be ok if your business decision-making cycle is also slow. If you are purely using it to inform an annual investment cycle it kind of makes sense. Nevertheless, I still have my doubts.

Human beings have limitations. Generally, we are only capable of juggling four or five variables when optimizing a single output. If we take IT risk as a major component of operational risk (given most organizations now rely heavily on IT), the task would be overwhelming: we have huge operating environments with vast numbers of assets, generating endless variables that we would need to assess to accurately understand our risk position. In most large firms, is it possible or fair to ask individuals to subjectively assess the current risk exposure with any degree of certainty?

Steps have been taken to bring more objectivity into the assessment. You can narrow the guide rails, as it were, by giving a more prescriptive guide to the assessment. For example, if you have ‘x’ in place (and it is working), this will reduce your likelihood or impact by ‘y’. There are a number of models around which do this, and you could also argue that this is the approach used by both NIST and ISO 27001 in their maturity assessments for controls.
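
A prescriptive guide of this kind is essentially a lookup table. The sketch below assumes a hypothetical rulebook in which each control, if in place and working, removes a fixed number of levels from likelihood and/or impact on a five-point scale. The control names and step values are invented for illustration and are not taken from NIST, ISO 27001 or any published model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ControlRule:
    likelihood_steps: int  # levels removed from likelihood
    impact_steps: int      # levels removed from impact


# Hypothetical rulebook: "if you have x in place, reduce by y"
RULES = {
    "multi_factor_authentication": ControlRule(likelihood_steps=2, impact_steps=0),
    "tested_backups": ControlRule(likelihood_steps=0, impact_steps=2),
    "network_segmentation": ControlRule(likelihood_steps=1, impact_steps=1),
}


def residual_levels(inherent_likelihood: int, inherent_impact: int,
                    controls: dict[str, bool]) -> tuple[int, int]:
    """Apply the rulebook to inherent levels (1 = lowest, 5 = highest).

    `controls` maps a control name to whether it is operating effectively.
    Unknown or ineffective controls earn no reduction.
    """
    likelihood, impact = inherent_likelihood, inherent_impact
    for name, effective in controls.items():
        rule = RULES.get(name)
        if rule is None or not effective:
            continue
        likelihood -= rule.likelihood_steps
        impact -= rule.impact_steps
    # Floor at the lowest level on each axis
    return max(1, likelihood), max(1, impact)


# Same inputs always give the same answer, whoever runs the assessment
print(residual_levels(5, 4, {
    "multi_factor_authentication": True,
    "tested_backups": False,  # in place but not working: no credit
}))
```

The benefit is consistency: two assessors with the same evidence reach the same residual level. The values in the rulebook are still judgments, just made once and centrally.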

Nevertheless, whilst these are a step forward, they are still point-in-time assessments, and I would argue, and have argued, that the IT environment these days is highly dynamic (new products, new technologies, new threats, new vulnerabilities). Even within a few days or weeks the risk exposure could change. If we are to optimize our risk profile (ideally against our spend), we’re going to need some help in managing the variables.

What if it was possible to continuously gather live data, and combine that data with other sources such that you could build objective risk algorithms to continuously calculate your risk exposure? What if you then added optimization algorithms to help you focus and prioritize remediation activity so that it accurately addresses risk exposure, with the added benefit that you could see exposure come down as the remediation is achieved (with no SMEs arguing about the mitigating effect of the action you’ve just closed)? Would this not be a game changer?

A capability like this would not only address the subjectivity inherent in current approaches, it would also deal with the point-in-time issue. And it would help those trying to manage risk to optimize the myriad of variables they face across multiple requirements, not least of which is likely to be cost.
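
As a toy illustration of the idea, and not a description of any vendor's actual algorithm, the sketch below recomputes exposure from per-asset data and ranks remediation actions by risk reduction per unit of cost. The model (exposure = breach probability × impact), the asset names and all figures are assumptions made up for the example; a real system would derive these inputs from live data feeds.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    name: str
    breach_probability: float  # assumed annual probability, 0..1
    impact: float              # assumed loss if breached, in currency units

    @property
    def exposure(self) -> float:
        return self.breach_probability * self.impact


@dataclass
class Remediation:
    name: str
    asset: str                # name of the asset it applies to
    cost: float               # must be greater than zero
    probability_after: float  # breach probability once completed


def total_exposure(assets: dict[str, Asset]) -> float:
    return sum(a.exposure for a in assets.values())


def risk_reduction(assets: dict[str, Asset], action: Remediation) -> float:
    asset = assets[action.asset]
    return (asset.breach_probability - action.probability_after) * asset.impact


def prioritize(assets: dict[str, Asset], actions: list[Remediation]) -> list[Remediation]:
    """Rank actions by exposure removed per unit of spend, best first."""
    return sorted(actions,
                  key=lambda a: risk_reduction(assets, a) / a.cost,
                  reverse=True)


def complete(assets: dict[str, Asset], action: Remediation) -> None:
    """Record a finished remediation; exposure is recalculated from data."""
    assets[action.asset].breach_probability = action.probability_after


assets = {
    "payments-db": Asset("payments-db", breach_probability=0.25, impact=1_000_000),
    "intranet-wiki": Asset("intranet-wiki", breach_probability=0.5, impact=20_000),
}
actions = [
    Remediation("harden intranet-wiki", "intranet-wiki", cost=5_000, probability_after=0.25),
    Remediation("patch payments-db", "payments-db", cost=10_000, probability_after=0.125),
]

print("exposure before:", total_exposure(assets))
best = prioritize(assets, actions)[0]
complete(assets, best)
print("completed:", best.name)
print("exposure after:", total_exposure(assets))
```

Because exposure is recomputed from the underlying data each time, closing an action moves the number directly, with no debate about how much credit it deserves.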

For cyber risk, Balbix’s Cyber Risk Quantification solution is showing that the capability to do much of this exists now. The computing power to sift through and process petabytes of data in order to optimize risk exposure is a reality.

So, if such capability does exist, is it now time to reconsider the subjective, point-in-time 4×5 box grid?